AI is brilliant in the moment. It can write code, draft emails, and answer questions with stunning fluency. But when the moment ends, the memory fades. Every new session starts from a blank slate.

Your AI forgets.

It forgets the bug you fixed last week, the customer feedback from yesterday, and the unique context of your work. This isn't just an inconvenience; it's the fundamental barrier keeping AI from becoming a true partner instead of a tool.

Today, we're introducing the missing layer.

Papr is the memory infrastructure for AI. We give intelligent agents the ability to learn, recall, and build on context over time, transforming them from forgetful tools into genuine collaborators.

Papr is built on MongoDB, Neo4j, and Qdrant.

The Scale Problem: When More Data Means Less Intelligence

As anyone who has deployed an AI agent knows, a fatal issue emerges at scale—what we call Retrieval Loss. It's the degradation of an AI system's ability to find relevant information as you add more data.

Here's what happens: You start with a few documents and chat logs, and your AI performs brilliantly. But as you scale—adding more conversations, more code repositories, more customer data—something breaks down. The signal gets lost in the noise. Your agent either fails to recall critical information or, worse, starts hallucinating connections that don't exist.

This is Retrieval Loss in action. The more context you provide, the less contextual your AI becomes. It's a cruel irony that has forced teams into a painful choice: limit your AI's knowledge or watch its performance degrade.

The industry's response has been to manually engineer context—a brittle, expensive, and ultimately unscalable band-aid solution.

We knew there had to be a better way.

Retrieval Loss: A Unified Metric for Memory System Performance

We created the Retrieval Loss formula to establish scaling laws for memory systems, much as Kaplan et al. (2020) established scaling laws for language models. Traditional retrieval systems have been evaluated with disparate metrics that don't capture the full picture of real-world performance. We needed a single metric that jointly penalizes poor accuracy, high latency, and excessive cost, the three factors that determine whether a memory system is production-ready. This unified approach lets us compare different architectures (vector databases, graph databases, memory frameworks) on equal footing and show that the right architecture gets better as it scales, not worse.

Retrieval Loss (without cost)

$$\text{Retrieval-Loss} = -\log_{10}(\text{Hit@}K) + \lambda \cdot \frac{\text{Latency}_{p95}}{100\,\text{ms}}$$

Where:

Hit@K = probability that the correct memory is in the top-K returned set

Latency_p95 = 95th-percentile (tail) latency in milliseconds

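λ (lambda) = the exchange rate between latency and accuracy: each 100 ms of p95 latency adds λ to the loss, so with λ = 1, an extra 100 ms of waiting hurts as much as a tenfold (one-decade) drop in Hit@K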

In practice, λ ≈ 0.5 makes the two terms comparable
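To make the two-term formula concrete, here is a minimal Python sketch that estimates Hit@K as the fraction of evaluation queries with a correct memory in their top-K results, takes p95 latency by the nearest-rank method, and combines the two. The function names and the nearest-rank percentile are illustrative assumptions, not part of the Papr API.

```python
import math
from collections.abc import Sequence


def hit_at_k(ranked: Sequence[Sequence[str]], relevant: Sequence[set[str]], k: int) -> float:
    """Estimate Hit@K: fraction of queries with at least one correct memory in the top k."""
    if not ranked:
        raise ValueError("need at least one query")
    hits = sum(
        1
        for results, correct in zip(ranked, relevant)
        if any(mem_id in correct for mem_id in results[:k])
    )
    return hits / len(ranked)


def p95(latencies_ms: Sequence[float]) -> float:
    """95th-percentile latency via the nearest-rank method."""
    if not latencies_ms:
        raise ValueError("need at least one latency sample")
    ordered = sorted(latencies_ms)
    rank = (95 * len(ordered) + 99) // 100  # ceil(0.95 * n) using integer arithmetic
    return ordered[rank - 1]


def retrieval_loss(hit_k: float, latency_p95_ms: float, lam: float = 0.5) -> float:
    """Two-term Retrieval Loss: -log10(Hit@K) + lam * (Latency_p95 / 100 ms)."""
    if not 0.0 < hit_k <= 1.0:
        raise ValueError("Hit@K must be in (0, 1]; -log10 is undefined at 0")
    return -math.log10(hit_k) + lam * (latency_p95_ms / 100.0)
```

For example, Hit@5 = 0.9 with a p95 latency of 200 ms gives −log₁₀(0.9) + 0.5 · 2 ≈ 0.046 + 1.0 ≈ 1.046. The log term is undefined when Hit@K is 0, which the sketch rejects explicitly.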

Extended Three-Term Formula (with cost):

$$\text{Retrieval-Loss} = -\log_{10}(\text{Hit@}K) + \lambda_L \cdot \frac{\text{Latency}_{p95}}{100\,\text{ms}} + \lambda_C \cdot \frac{\text{Token\_count}}{1{,}000}$$

Where:

λL = weight for latency (typically 0.5)

λC = weight for cost (typically 0.01)

Token_count = total number of prompt tokens attributable to retrieval

Recommended Default Values:

λL = 0.5 (latency weight)

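λ$ = 1.0 (cost weight when cost is measured in dollars per query and normalized by $0.10, i.e. λ$ · (Cost_per_query / $0.10), as an alternative to the token-based λC term)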
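The three-term version can be sketched the same way. The first hypothetical helper below uses the token-based cost term with the typical weights above (λL = 0.5, λC = 0.01); the second assumes the dollar-based reading of the λ$ default, λ$ · (Cost_per_query / $0.10). Both function names are illustrative, not part of any Papr SDK.

```python
import math


def retrieval_loss_tokens(
    hit_k: float,
    latency_p95_ms: float,
    token_count: int,
    lambda_latency: float = 0.5,
    lambda_cost: float = 0.01,
) -> float:
    """Three-term Retrieval Loss with cost measured in retrieval prompt tokens."""
    if not 0.0 < hit_k <= 1.0:
        raise ValueError("Hit@K must be in (0, 1]")
    accuracy_term = -math.log10(hit_k)
    latency_term = lambda_latency * (latency_p95_ms / 100.0)
    cost_term = lambda_cost * (token_count / 1000.0)
    return accuracy_term + latency_term + cost_term


def retrieval_loss_dollars(
    hit_k: float,
    latency_p95_ms: float,
    cost_per_query_usd: float,
    lambda_latency: float = 0.5,
    lambda_dollars: float = 1.0,
) -> float:
    """Assumed dollar-based variant: cost term is lambda_dollars * (cost per query / $0.10)."""
    if not 0.0 < hit_k <= 1.0:
        raise ValueError("Hit@K must be in (0, 1]")
    accuracy_term = -math.log10(hit_k)
    latency_term = lambda_latency * (latency_p95_ms / 100.0)
    cost_term = lambda_dollars * (cost_per_query_usd / 0.10)
    return accuracy_term + latency_term + cost_term
```

With made-up inputs (not measurements), a system at Hit@K = 0.80, 120 ms p95, and 4,000 retrieval tokens scores about 0.737, while one at Hit@K = 0.95, 300 ms p95, and 1,500 tokens scores about 1.537. At these weights the faster system wins despite lower accuracy, which is exactly the kind of trade-off a single metric is meant to surface.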

Key Insights:

This formula was designed to create Kaplan-style scaling law plots for memory layers

Lower values indicate better overall retrieval (high accuracy, low latency, and, with the three-term formula, low cost)

The formula helps compare different retrieval systems on a single metric

Everyone is engineering context. We're predicting it.

Most AI systems today rely on vector search to find semantically similar information. This approach is powerful, but it has a critical blind spot: it finds fragments, not context. It can tell you that two pieces of text are about the same topic, but it can't tell you how they're connected or why they matter together.

At the heart of Papr is the Predictive Memory Graph—a novel architecture that goes beyond semantic similarity to map the actual relationships between information. Instead of just storing memories, we predict and trace the connections between them.

When Papr sees a line of code, it doesn't just index it—it understands that this code connects to a specific support ticket, which relates to a Slack conversation, which ties back to a design decision from three months ago. We build a rich, interconnected web of your entire knowledge base.
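As a toy illustration of tracing connections rather than matching fragments, the sketch below stores memories as nodes joined by labeled relationships and walks outward from one memory with breadth-first search. It is not Papr's Predictive Memory Graph; the class, relation names, and memory ids are hypothetical.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class MemoryGraph:
    """Toy memory graph: nodes are memories, edges are labeled relationships."""

    nodes: dict[str, str] = field(default_factory=dict)  # memory id -> content
    edges: dict[str, list[tuple[str, str]]] = field(default_factory=dict)  # id -> [(relation, neighbor id)]

    def add_memory(self, mem_id: str, content: str) -> None:
        self.nodes[mem_id] = content
        self.edges.setdefault(mem_id, [])

    def link(self, src: str, relation: str, dst: str) -> None:
        # Store the edge in both directions so context can be traced from either end.
        self.edges.setdefault(src, []).append((relation, dst))
        self.edges.setdefault(dst, []).append((relation, src))

    def trace(self, start: str, max_hops: int = 3) -> list[tuple[int, str, str]]:
        """Breadth-first walk from `start`; returns (hops, relation, memory id) tuples."""
        seen = {start}
        queue = deque([(start, 0)])
        found: list[tuple[int, str, str]] = []
        while queue:
            node, hops = queue.popleft()
            if hops == max_hops:
                continue  # don't expand past the hop limit
            for relation, neighbor in self.edges.get(node, []):
                if neighbor not in seen:
                    seen.add(neighbor)
                    found.append((hops + 1, relation, neighbor))
                    queue.append((neighbor, hops + 1))
        return found


# Hypothetical memories mirroring the code -> ticket -> Slack -> design decision chain.
g = MemoryGraph()
g.add_memory("code:retry.py", "Retry the payment call with exponential backoff")
g.add_memory("ticket:SUP-101", "Customers report checkout timeouts")
g.add_memory("slack:payments", "Thread agreeing to add backoff to payment retries")
g.add_memory("decision:backoff-design", "Design note: retries must be idempotent")
g.link("code:retry.py", "fixes", "ticket:SUP-101")
g.link("ticket:SUP-101", "discussed_in", "slack:payments")
g.link("slack:payments", "follows_from", "decision:backoff-design")

for hops, relation, mem_id in g.trace("code:retry.py"):
    print(hops, relation, mem_id)
# 1 fixes ticket:SUP-101
# 2 discussed_in slack:payments
# 3 follows_from decision:backoff-design
```

A pure vector search over these four texts might surface the code and the Slack thread as "about retries" without telling you that one fixes the ticket the other discussed; the labeled path is what carries that context.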

This is how we move from simple retrieval to true understanding.

Predictive Memory: Context Before You Need It

The Memory Graph enables something unprecedented: Predictive Memory. Instead of waiting for your agent to search for information reactively, we predict the context it will need and deliver it proactively.

Our system continuously analyzes the web of connections in your Memory Graph, synthesizing related data points from chat history, logs, documentation, and code into clean, relevant packets of "Anticipated Context." This context arrives at your agent in real-time, exactly when it's needed.
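This section doesn't spell out how Anticipated Context packets are built. As one hypothetical way to picture the idea, the sketch below reacts to a trigger (say, the agent opening a file), collects memories within a few hops of it, ranks them by hop distance and then recency, and packs them into a fixed token budget before the agent issues any query. The `Memory` type, the function name, the ranking rule, and the word-count stand-in for tokens are all assumptions for illustration, not Papr's algorithm.

```python
from collections import deque
from dataclasses import dataclass


@dataclass(frozen=True)
class Memory:
    mem_id: str
    text: str
    updated_at: int  # hypothetical timestamp: larger means more recent


def anticipate_context(
    trigger: str,
    memories: dict[str, Memory],
    links: dict[str, list[str]],
    token_budget: int,
    max_hops: int = 2,
) -> list[Memory]:
    """Assemble a packet of likely-needed memories around `trigger`, ahead of any query."""
    # 1. Collect the trigger's neighborhood, recording each memory's hop distance.
    hops = {trigger: 0}
    queue = deque([trigger])
    while queue:
        node = queue.popleft()
        if hops[node] == max_hops:
            continue
        for neighbor in links.get(node, []):
            if neighbor not in hops:
                hops[neighbor] = hops[node] + 1
                queue.append(neighbor)

    # 2. Rank: closer memories first, ties broken by recency.
    candidates = [m for m in hops if m != trigger]
    candidates.sort(key=lambda m: (hops[m], -memories[m].updated_at))

    # 3. Greedily pack into the budget, using word count as a crude token proxy.
    packet: list[Memory] = []
    used = 0
    for mem_id in candidates:
        cost = len(memories[mem_id].text.split())
        if used + cost <= token_budget:
            packet.append(memories[mem_id])
            used += cost
    return packet


memories = {
    "file": Memory("file", "payments/retry.py opened in editor", 100),
    "ticket": Memory("ticket", "checkout times out under load", 90),
    "pr": Memory("pr", "PR adding exponential backoff to retries", 95),
    "slack": Memory("slack", "team agreed retries must be idempotent", 80),
}
links = {"file": ["ticket", "pr"], "ticket": ["file", "slack"], "pr": ["file"], "slack": ["ticket"]}

packet = anticipate_context("file", memories, links, token_budget=12)
print([m.mem_id for m in packet])  # ['pr', 'ticket']
```

The budget step matters as much as the graph walk: with 12 "tokens" the two-hop Slack memory is dropped, while a budget of 20 would include it.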

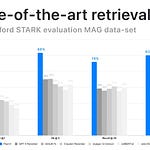

The results speak for themselves: our latest papr-2 model achieved 91% Hit@5 (up from 86%) and ranks #1 on Stanford's STaRK benchmark for retrieval accuracy. While other systems suffer from Retrieval Loss as they scale, Papr's predictive memory means that the more data you add, the smarter and more accurate your agents become.

We've turned the scaling problem into a scaling advantage.
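As a worked example of how the Hit@5 improvement from 86% to 91% moves the accuracy term of Retrieval Loss (holding latency and cost fixed, since the benchmark figures above don't report them):

$$
\begin{aligned}
-\log_{10}(0.86) &\approx 0.0655 \\
-\log_{10}(0.91) &\approx 0.0410 \\
\Delta_{\text{accuracy}} &\approx 0.0245
\end{aligned}
$$

The miss rate falls from 14% to 9%, about 36% fewer misses. At λ = 0.5, a 0.0245 reduction in loss is equivalent to shaving roughly 0.0245 / 0.5 × 100 ms ≈ 4.9 ms off p95 latency.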

What's Possible When AI Remembers?

When AI has connected memory, applications become transformative:

🎯 Customer Support That Actually Knows You

Your support agent remembers every past ticket, conversation, and preference. Instead of forcing customers to repeat themselves, it can proactively say, "I see you had a similar networking issue last quarter—let's start with what we learned there." Frustrating experiences become personal and efficient.

💻 A Coding Partner with Institutional Knowledge

A development agent doesn't just see a function—it sees the pull request that introduced it, the bug it solved, the design discussion that shaped it, and the tests that validate it. Developers can ask complex questions like "Why did we choose this architecture?" and get answers rooted in the project's complete institutional memory.

🤝 A Personal Assistant That Grows With You

Finally, an assistant that connects past and present. It remembers decisions from last month's strategy meeting, recalls your preferred communication style for different stakeholders, and understands the context of your ongoing projects—making it a true collaborator that learns and evolves alongside you.

Getting Started: Built for Developers

Papr integrates seamlessly into your existing stack through our developer-first API. Whether you're building customer support tools, coding assistants, or personal productivity apps, you can add persistent memory in minutes, not months.

Key features:

✅ RESTful API with native SDKs for Python and TypeScript (plus any language via REST)

✅ Real-time context streaming for instant memory access

✅ Fine-grained ACL and permissions with secure sharing controls

✅ Enterprise-grade security with end-to-end encryption

✅ Horizontal scaling that improves performance as data grows

Our Mission: Give Intelligence a Heart

We believe the future of AI isn't stateless; it's continuous. AI that learns, grows, and remembers. Our mission is to build the foundational memory layer for this future, creating intelligence that is safe, trustworthy, empathetic, and genuinely helpful.

An AI that forgets is a tool. An AI that remembers is a partner.

The era of Retrieval Loss ends here. The age of Predictive Memory begins now.

Ready to eliminate Retrieval Loss from your AI systems?

👉 Explore the Papr API and start building today: platform.papr.ai

👉 Join our developer community: https://discord.com/invite/J9UjV23M